This Db2 12 technical overview presents in an “easy to read” table list a review of new Db2 12 features

Have you encountered any other Db2 12 changes you’d like to discuss?

Now that Db2 12 has gone GA I can finally talk about it. So here’s another new Features “first look” at what I think is cool, great, or odd !

This is my personal list for a Db2 12 technical overview – in no particular order :

- Db2 12 SQL Optimizer, triggers, Arrays, Merge, UNICODE Columns. Temporal, SQL pagination, SQL Stability, Log, Partitions,…

- Data Sharing

- Utilities DSN1COPY, Alternate Copy Tools, Audit, REORG, PBG reorgs, COMPRESSRATIO, RELOAD, RO tablespaces, LOAD, BACKUP and Recovery, PiT, RUNSTATS…

AGILE This release of Db2 will be ”the last” release, as Db2 Development has gone all agile on us and will be doing Continuous Delivery (CD) from now on. CD promises Easier, Cheaper, Faster and Simpler Db2 maintenance and the quick realization of new functionality.

Db2 12 – SQL

Optimizer

MQT or Table expression columns are “trimmed” if they are not used in the outer query.

In LEFT OUTER JOIN, if columns are not used, they can be Pruned.

UNION ALL gets major work when pushing down join predicates as well as pushing down ORDER BY and FETCH FIRST

Outer table joins can get reordered to avoid unnecessary materializations

User-defined functions get two improvements with merge and the introduction of indexes on the join or correlation predicates that are passed in as parameters

Adaptive Index is designed for Multi Index and single index list prefetch to determine at execute time the filtering of each index. This ensures the optimal execution sequence of indexes or, perhaps, a quicker fallback to Tablespace scan if no filtering index exists.

TRIGGERS

The new “advanced” triggers enable SQL and Global variable usage and SQL PL.

ARRAYS

Get a couple of nice new features, specifically the use of a global variable as an array type and the ability to use the ARRAY_AGG without forcing an ORDER BY.

GLOBAL VARIABLES

Get LOB support and in a SET they can be the target.

PureXML

The XMLMODIFY can do multiple document updates in a single invocation. Various XML performance boosts are also included, e.g. XMLTable and the XSLTRANSFORM allows transformations to different formats.

JSON

When using the JSON_VAL function the first argument must not now always be a BLOB. It can be a view, CASE, table expression, trigger transition variable or SQL PL variable or parameter.

MERGE

Is now a full MERGE with the ability to use table references with multiple MATCHED clauses, including DELETE operations.

SQL PAGINATION

The ability of Db2 to “understand” typical paging has been greatly boosted. Typically it was always coded like:

SELECT blah blah blah

FROM mytable

WHERE (SURNAME = ‘BOXWELL’ AND FORENAME > ‘ROY’)

OR (SURNAME > ‘BOXWELL’)This is pretty horrible for the Db2 optimizer but we *all* know what we really mean! Now in Db2 12, so does the optimizer! Sadly you must rewrite your queries a little so this example becomes:

SELECT blah blah blah

FROM mytable

WHERE (SURNAME, FORENAME) > (‘BOXWELL’, ‘ROY’)

Also with this comes a nice little feature called OFFSET ROWS. Typically, this is for when the connection to the server is a bit shaky and so after some paging, when the cursor is reopened, the code “knows” it can miss the first 60 rows, so the cursor changes to be:

SELECT * FROM mytable OFFSET 60 ROWS

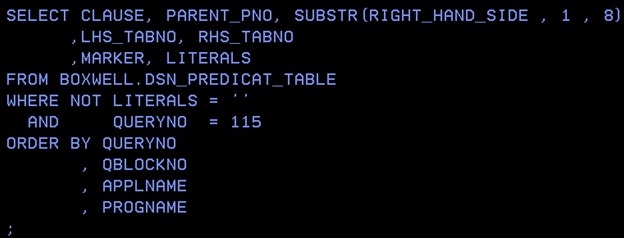

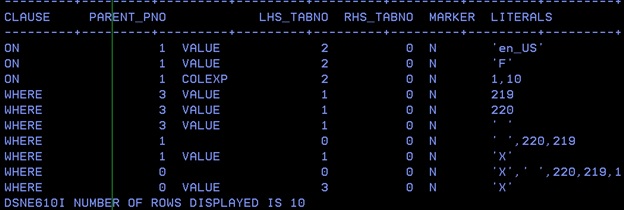

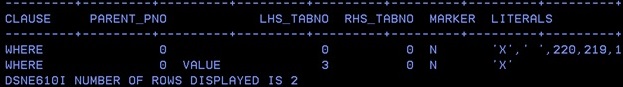

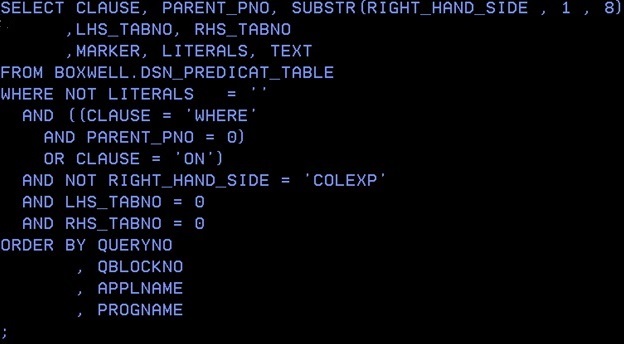

Nice feature, but beware of polluting the DSC! It is much better to use a parameter marker for these Offsets!

UNICODE Columns

In DB2 11, we got a “fix” for UNICODE columns that was really a “crutch”. This has now been fixed with real UNICODE columns in DB2 12. You must migrate your existing data though!

Piece-wise DELETE

This is a feature I have wanted for decades! Simply add the FETCH FIRST nnnn ROWS ONLY within a DELETE and then programmatically loop around until you are done. Much easier than the method we have today of DECLAREing a CURSOR with an UPDATE of a dummy column and the DELETE WHERE CURRENT OF and after 5000 or so issue a COMMIT.

TEMPORAL RI

You can now add RI as normal and not be forced to use a trigger or stored procedure.

TEMPORAL TABLES

Get the ability to not just be inclusive-exclusive but also inclusive-inclusive.

TEMPORAL Logical Transactions

Another new feature with temporal tables, is the ability to support logical units of work for SYSTEM_TIME. These logical units of work are not determined by COMMIT or ROLLBACK but by using a built-in Global Variable.

PERCENTILE functions

Two new functions PERCENTILE_CONT and PERCENTIL_DISC are new BIFs.

DRDA Fast Load

Is the ability to load data into z/OS DB2 from files sitting on distributed clients.

ODBC

Gets a new INI keyword KEEPDYNAMIC and the connection attribute of SQL_ATTR_KEEP_DYNAMIC.

Obfuscated Code

Mainly of interest to Vendors is the ability to hide your stored procedure, TRIGGER or UDF coding from prying eyes.

RLF for Static SQL

This is a big one! The Resource Limit Facility has always only been available for dynamic SQL. Now you can also use it to cap Static SQL.

TRANSFER OWNERSHIP

This is a very handy way of clearing out all the old owners from a DB2 system.

SQL Stability

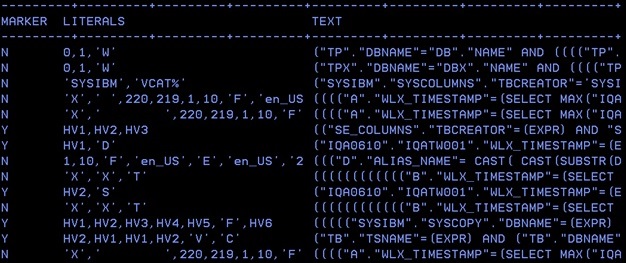

Dynamic Plan Stability is nearly the same as BIND QUERY, but the hope is that it will be easier and better to use! But beware of saving all of your dynamic SQL away!

Static Plan Stability gets a good enhancement that allows FREE on the original or previous. What is really good, is that the current version can be in use so there is no application outage anymore.

Insert

New Insert algorythm can be used for faster unclustered insert processing in some cases. Only for UTS MEMBER CLUSTER (This is actually the default for these spaces).

CONCENTARTE LITERALS

Now supported at the Package Level.

FTB

Fast Index Traversal – Especially good for randomly accessed indexes. If the index is unique, and 64 bytes or less, it is eligible. Index is controlled with the new Catalog table SYSIBM.SYSINDEXCONTROL and the -DISPLAY STATS(INDEXMEMORYUSAGE) command.

Log

Active log size can go from 4GB now up to 768GB ! Be careful here!

In-Memory bufferpools

by using PAGESTEAL(NONE) keyword.

PARTITIONS

Finally we get the chance to give each partition its own DSSIZE as well as the Partitioning indexes! This is great, but sadly is only available to an existing space once you have reorged the whole tablespace…However, once you are there, you can then have data and index parts up to 1TB in size, plus, when you do an ALTER of the DSSIZE, it does not cause an outage (as long as you make it bigger!). A side effect of this is that the RID is now seven bytes (see REORG mapping table for other changes). You can now also add partitions in the middle of an existing PBR table.

Data Sharing

Recovery

of retained locks from a failed member can be handled automatically

LPL and GRECP recovery

LPL and GRECP recovery auto retries three times after waiting three minutes

Db2 12 Utilities

DSN1COPY

In DB2 11 this utility got a few sanity checks and now the REPAIR CATALOG utility can fix some of these. The REPAIR CATALOG TEST also looks for some problems caused by misuse/abuse of DSN1COPY.

ALTERNATE COPY POOLS

The usage of BACKUP SYTEM is growing. So is the amount of storage required! The idea here, is to define a set of copy pools, but only one for many DB2 subsystems. The alternate copy pool uses as many volumes as it needs and leaves the other volume free for a different subsystem backup. This reduces the amount of space that must be allocated.

Audit

A new Authorization arrived: UNLOADAUTH to “replace” the “Does the user have SELECT auth on the table?” check that has run up to now. UNLOAD is special and should be controlled over this auth and no longer over just SELECT.

REORG

PBG tablespaces get the best news here!

PBG reorgs can now spill over into a new PBG if the row(s) do not fit back into the original partition. Classic case here, is compressed data that no longer fits back. This forced people to use a TS level reorg or not use compression.

If the PBG contains LOB data and it extended to a new partition in the log apply phase, then the LOB space was left in COPY Pending… pretty horrible and that no longer happens in DB2 12.

Another PBG bonus, is the delete of “emptied” Partitions after a REORG has completed.

Improved FlashCopy support – You can now decide to stop the REORG if the flash copy fails.

New Catalog column COMPRESSRATIO for use by utilities that records the compression savings at the record instead of at the page level.

RELOAD phase can now be offloaded to zIIP.

RO tablespaces can now be REORGed at any SHRLEVEL.

The mapping table gets changed again due to the relative page numbering in the new PARTITION support (seven byte RID).

LOAD

PART REPLACE with dummy input against an empty (PBR) partition could be quicker.

LOAD SHRLEVEL CHANGE PARALLEL support for PBG for SHRLEVEL CHANGE.

Additional zIIP offload, like in REORG, in the RELOAD phase, including the data conversion and loading of the record into the page set.

LOAD RESUME BACKOUT YES to avoid RECP on failure. Adds a new option on LOAD RESUME SHRLEVEL NONE to allow LOAD to back out the rows already loaded upon encountering an error (such as conversion, LOB/XML, duplicate key, referential integrity violation) without leaving the page set in RECP.

PREFORMAT support for auxiliary tables. Support is extended to LOB table spaces and auxiliary indexes.

Maintain MAXASSIGNEDVAL for identity columns. LOAD now maintains the MAXASSIGNEDVAL for user-provided input and resets the value if a LOAD REPLACE is run on the table space.

LOAD REPLACE support for the COMPRESSRATIO column for use by utilities that records the compression savings at the record instead of at the page level column.

BACKUP and RECOVERY

Point-in-Time support for PBGs, Flashcopy FLASHCOPY_PPRCP keyword. As mentioned the default is changed to not recover unchanged objects. MODIFY RECOVERY gets two new options: DELETEDS to delete the datasets and NOCOPYPEND to not set COPY pending after doing the MODIFY.

PiT

Has been improved with the ability to skip unnecessary recoveries. SCOPE UPDATE only processes objects that have been updated up to the TOLOGPOINT or TORBA.

RUNSTATS

New CLUSTERRATIO formula which should better reflect dynamic prefetch. Terry Purcell has stated that it is not a huge change and does not require a RUNSTATS of all tablespaces!

FREQVAL COUNT nn

The COUNT nn is now optional and, if not used, then RUNSTATS will work out the best number for you. This is really, really nice and I would recommend this in an instant! It has also been retro fitted to DB2 11.

Autonomic Statistics with PROFILEs

I am no fan of this, as I believe it makes for a pretty nasty feedback loop where anyone’s “dumb” QMF/SAS/DSNTEP2/SPUFI will get inserted as a PROFILE COLGROUP, and then these PROFILEs will get bigger and bigger until no-one knows which are really useful and which are just fluff! I would recommend setting the ZPARMs STATFDBK_SCOPE to ALL (Default) STATFDBK_PROFILE to NO (Default is YES). When YES is used DB2 12 will create and/or maintain a PROFILE for you. Finally, validate that the SYSTABLES column STATS_FEEDBACK is set to “N” (Default is “Y”) for any and all tables where you do *not* want SYSSTATSFEEDBACK data. E.g. All the DSNDB01 tables where a RUNSTATS is not even allowed!

DSC

DSC Invalidation got switched off by default. In the past *any* RUNSTATS flushed the cache. Now you must add the key word INVALIDATECACHE YES to get this to occur. (Unless you use the REPORT NO UPDATE NONE syntax this still just flushes the DSC)

Inline Stats

Inline Stats got a huge boost with PROFILE support, MOST/BOTH/LEAST and LOAD PARALLEL got inline stats.

Have you encountered any other Db2 12 changes you’d like to discuss?

As usual, feel free to email me with questions or comments.

TTFN

Roy Boxwell

5