In collaborazione con Expertise4IT

Con il package di salvataggio globale delle RUNSTATS implementato da SEG: Per quale ragione questa buona idea in qualche caso fallisce?

• La storia

• Cos’è accaduto realmente?

• Come risolvere il problema?

• Rescue Steps

• Un esempio (comprensivo di pannelli JCL)

• Il prossimo mese

Questo mese vi voglio raccontare una storia. La storia è vera, ma I nomi sono stati cambiati per proteggere gli innocenti

La storia

Una grande azienda schedula regolarmente la fasatura dei processi di produzione nella notte di Giovedì. Lo scorso anno, una notte tutto procedeva regolarmente ma … al Venerdì mattina …….…i telefoni cominciarono a squillare perchè gli utenti si lamentavano di tempi di risposta lunghi o nulli (timeout) in un’applicazione piuttosto critica per il business.Il problema rapidamente evolve dai normali ‘spegnete e riavviate’ e ‘avete cambiato qualcosa?’ ai senior managers che chiedono per quale motivo le cose non funzionano più. |

Allo stato il Gruppo DBA non era stato ancora coinvolto, dal momento che il primo pensiero era che si trattasse di un “bad” package passato in produzione accidentalmente nella notte.

- Il Gruppo di gestione della produzione, allora, riportava indietro I packages in gioco, ma l’operazione non produceva effetti….

- I ritardi di consegna diventavano peggiori. Vengono quindi ‘stoppati’ quasi tutti i server WebSphere, almeno per permettere che un pò di lavoro venisse eseguito sul sistema sovraccarico. Essendo ormai giunti al livello di panico, i DBA and il team JAVA vengono coinvolti.

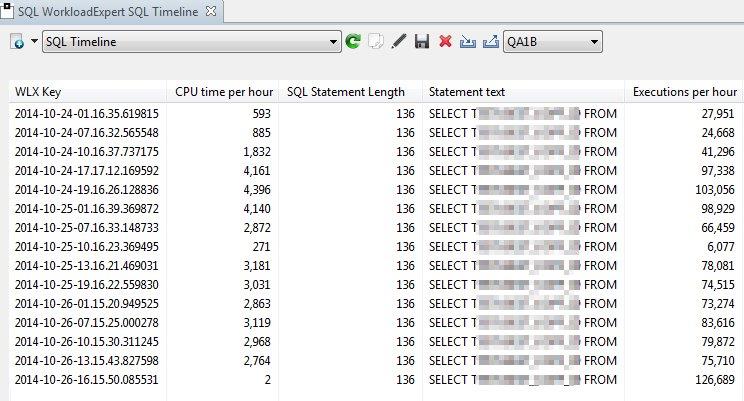

- E questi trovano rapidamente il colpevole; si trattava di un SQL dinamico molto complesso che si era ‘comportato’ correttamente e con efficienza fino ad un certo istante della notte di Giovedì, e adesso performava in maniera disastrosa.

- I DBA riorganizzano le tabelle più consistenti fra quelle in gioco, nella speranza che le cose vadano meglio, ma, purtroppo, non ci sono risultati apprezzabili….

Finalmente il DBA propone la creazione di un indice con relative RUNSTATS in produzione.

| L’access path si modifica sostanzialmente e le performance tornano ad essere buone. I server WebSphere vengono restartati e, gradualmente, si torna alla normalità. Tutto questo processo é durato 2 giorni! L’azienda deve rifasare la sua catena logistica e le consegne Just-In-Time, quindi il blocco ha avuto qualche seria ripercussione, ovviamente…. E questa è tutta la storia |

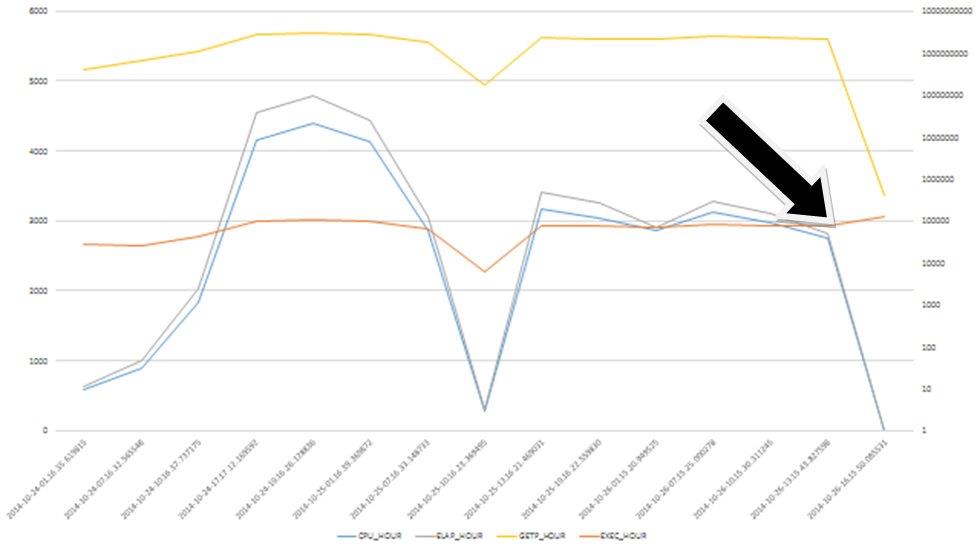

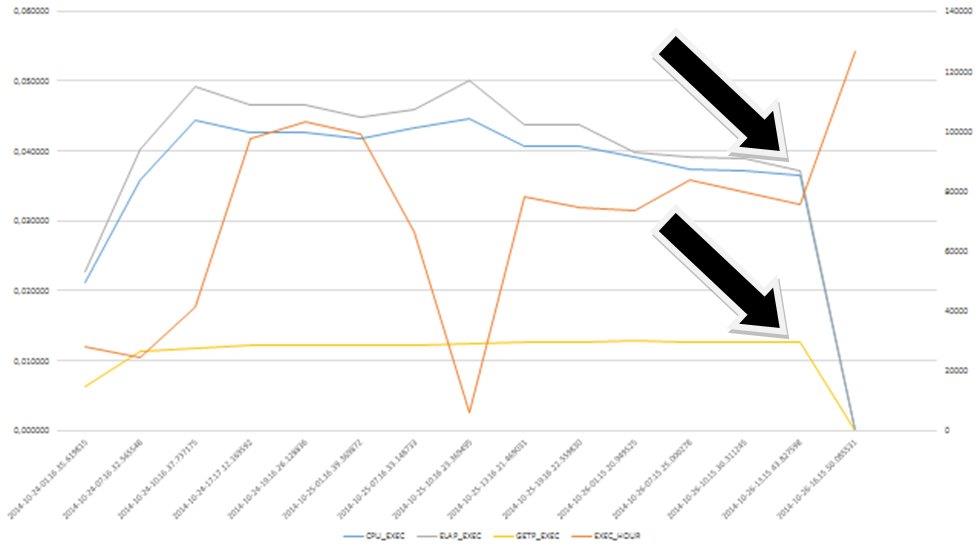

Cosa è realmente avvenuto?

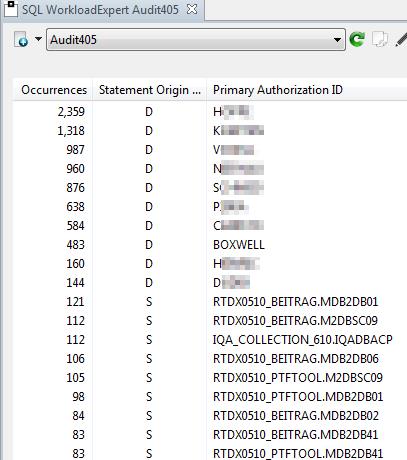

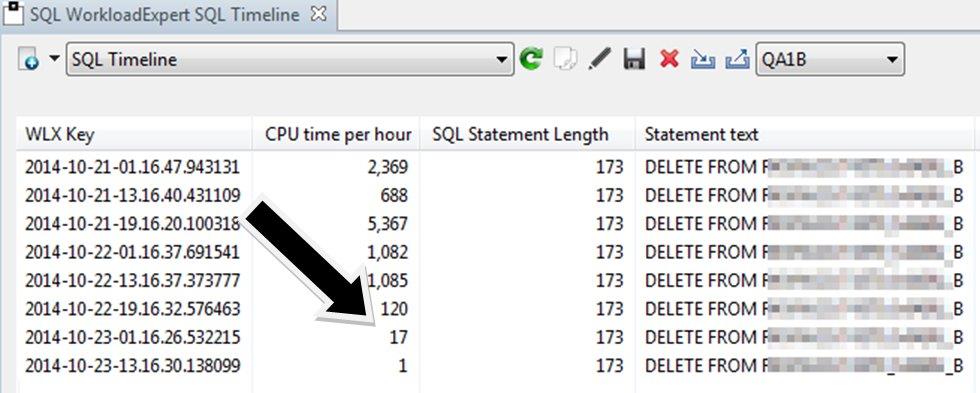

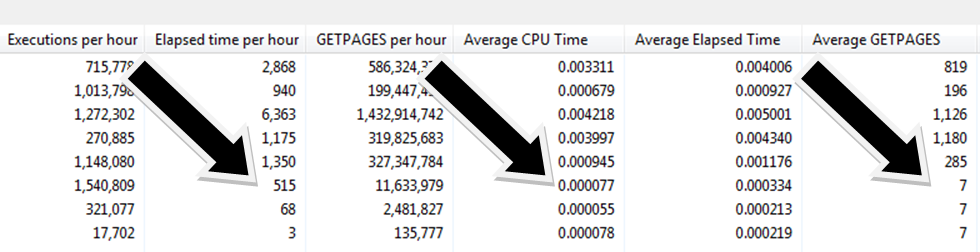

Il DBA, investigando sulle possibili cause del blocco, ha scoperto che ciò che realmente era accaduto, era che era andata in macchina una RUNSTATS solo su una piccola tabella che, fatta girare durante la notte di Giovedì in un momento inopportuno, aveva determinato un passaggio dell’access path allo stato di “pear-shaped”(aka Belly Up) per tutta la giornata di Venerdì e per metà del Sabato…

Come risolvere rapidamente e facilmente il problema?

Il DBA pensò ai modi in cui il problema, che avrebbe potuto riproporsi in futuro, potesse essere risolto in maniera facile e rapida. E qui comincia la mia parte della storia…

Questa azienda utilizza software proprio e possiede una licenza d’uso della componente Enterprise Statistics Distribution (ESD) del Bind ImpactExpert, che estrae, e opzionalmente converte, tutte i dati di catalogo di cui ha bisogno l’ottimizzatore per fare il proprio lavoro. Normalmente, I clienti utilizzano questo tool per copiare le statistiche di produzione in stile ‘sand box’, per vedere se un APAR o una migrazione di DB2 può causare problemi imprevisti. Per questo motivo, essi usano la componente Early-PreCheck del nostro tool Bind ImpactExpert per SQL statico e dinamico. Adesso facciamo un altro scenario, chiamato DSC (Dynamic Statement Cache) Protection, che dovrebbe realizzare quello che desidera il cliente, ma fa anche molto altro e, ovviamente, ha costi più elevati!

Ed è così che nacque l’idea di un tool ‘tascabile’ che abbiamo chiamato RUNSTATS Rescue. Ho sentito che chiedevate “Perchè si chiama PocketTool?”; la risposta è: “Perchè costa quanto il denaro che si tiene in tasca!” (aka Pin Money negliUSA o una “allowance” se preferite). Questi tools sono realmente molto economici!

Adesso, prima che smettiate di leggere questa newsletter e cominciate a lamentarvi del fatto che si tratti di una pura attività di marketing, per favore mettetevi in testa che quanto io descrivo in questo documento potrebbe anche essere stato scritto da voi e, quindi, avete solo bisogno di darmi credito sufficiente per condividere l’idea…

RUNSTATS Rescue

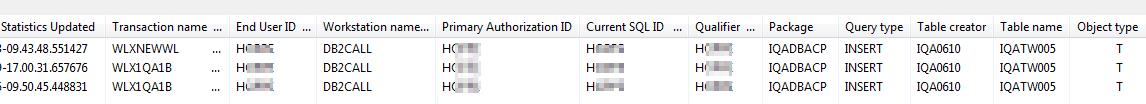

L’idea è usare l’EXPLAIN in qualunque modalità, shape or form, sia in SPUFI, che direttamente all’interno di qualsiasi monitor, semplicemente per ‘EXPLAINare’ l’SQL critico, e tenere a mente quale PLAN_TABLE owner state usando e, anche, il QUERYNO che avete appena usato.

Usando questi due inputs, RUNSTATS Rescue analizza l’output dell’ EXPLAIN per costruire una lista di control cards di estrazione e update per il nostro tool ESD, per tutte le tabelle usate e per I relative indici –anche se, ovviamente, non in uso! Infine viene anche generato un flusso di RUNSTATS DSC per tutti I tablespaces coinvolti nella query al fine di avere la certezza che la prossima volta che lo statement critico andrà in macchina, userà le corrette statistiche.

Ora, ovviamente, sorge la domanda: “Come faccio a sapere quail sono le statistiche da usare come Rescue statistics?”

La risposta è: “Quelle che c’erano prima prima che venisse eseguita una REORG con inline statistics o una RUNSTATS “. Questo è il punto chiave da tenere a mente: dovete semplicemente eseguire lo ESD extract prima che venga eseguita qualunque operazione di maintenance sul Database.

Molti siti hanno giorni, o weekends, in cui girano le procedure di maintenance, e non è certo un problema estrarre I dati e salvarli, per esempio,in un GENGROUP, al fine di facilitare l’individuazione di data e time in cui le statistiche erano ‘buone’ e poi, con il job di RUNSTATS Rescue, ripristinare molto rapidamente le statistiche necessarie. Questo consente al gruppo DBA di avere più tempo per capire cos’è realmente successo e apportare le giuste correzioni, quantomeno rimanendo a proprio agio.

10 Rescue Steps

- 1. Selezionare il nuovo scenario di RUNSTATS Rescue

- 2. Generare JCL

- 3. Opzionalmente copiarle in GENGROUP dataset

- 4. Inserire EXPLAIN TABLE-CREATOR e QUERYNO

- 5. Lancio automatico del nostro browser di catalogo

- 6. Drill down fino al livello degli indici

- 7. Chiedere un “new” file name per le “rescue” statistics estratte

- 8. Eseguire l’estrazione delle RUNSTATS Rescue

- 9. Resettare le statistiche ed eseguire le RUNSTATS

- 10. Le “Rescued” Statistics

Di seguito un esempio guidato di cosa avviene in realtà:

In basso nella schermata, si può osservare il nuovo scenario delle RUNSTATS Rescue – Selezionandolo si attiva una finestrella con tre passi. Il primo deve essere eseguito solo una volta e va semplicemente inserito in un job produttivo esistente. Si raccomanda di farne il primo job della normale catena di maintenance del DB2 Database Maintenance.

1 – Select the new scenario RUNSTATS Rescue

2 – Generate some JCL

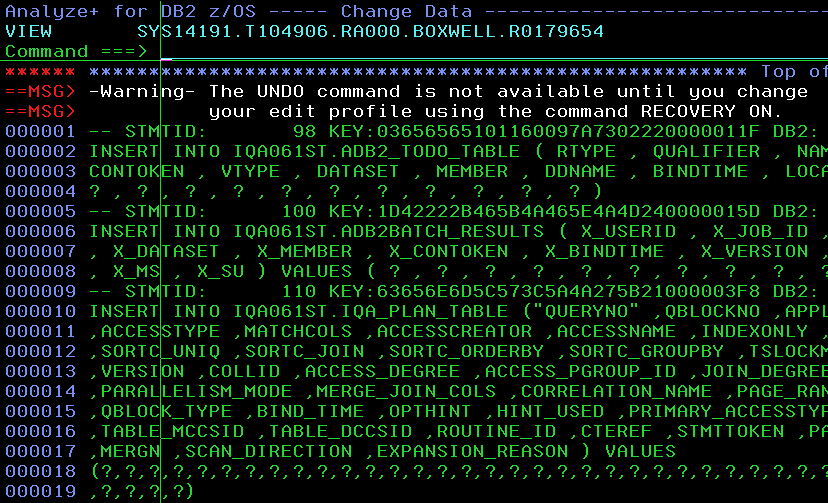

La prima opzione genera alcuni JCL come sotto illustrato:

3 – Opzionalmente copiare in un GENGROUP dataset

Esempio di step finale di copia su GENGROUP

4 – Insert the EXPLAIN TABLE-CREATOR and QUERYNO

Selezionare il secondo passo per preparare le RUNSTATS Rescue

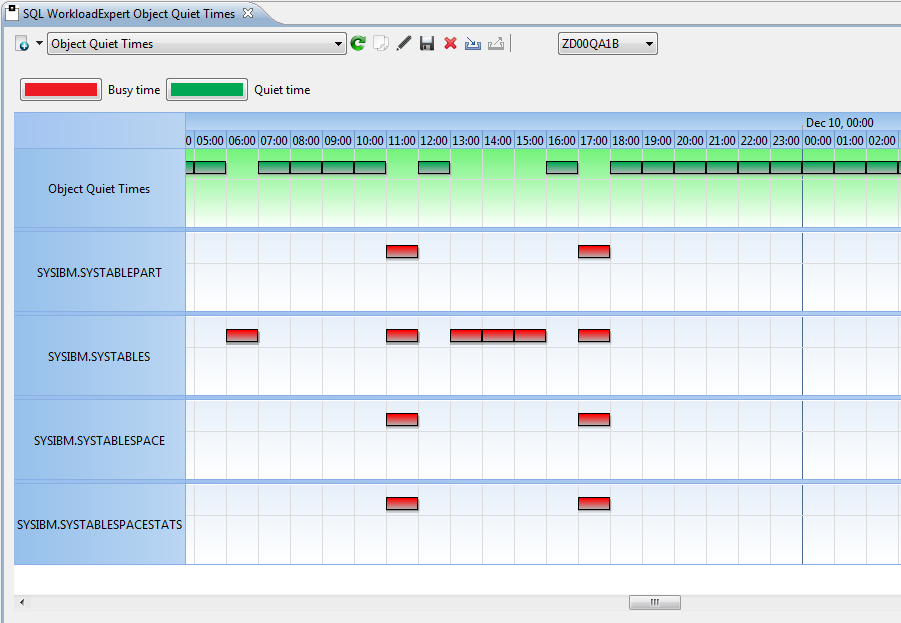

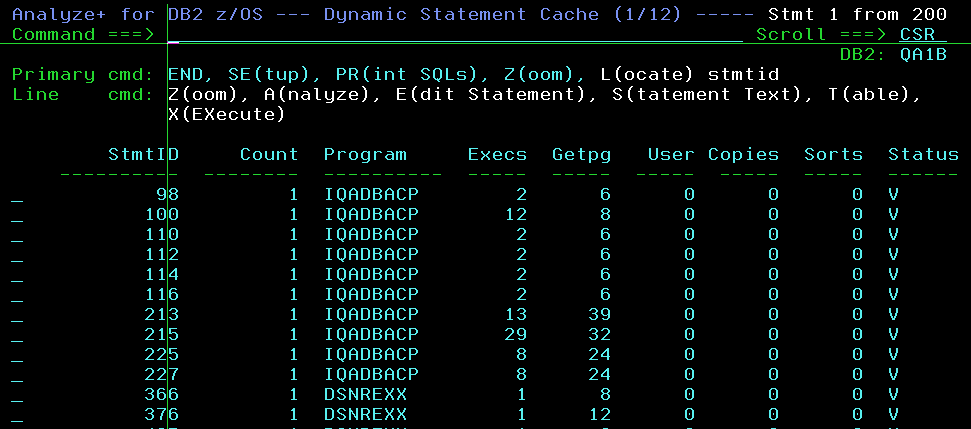

5 – Lancio automatico della scansione di catalogo

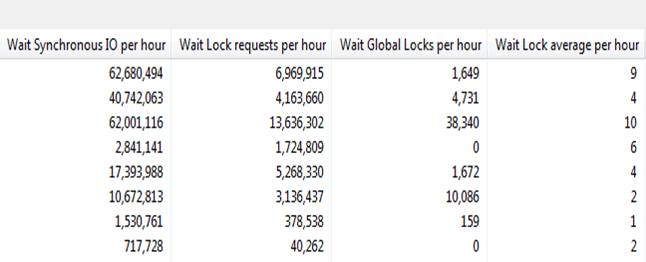

Premendo “enter” si lancia il browser sul catalogo per consentire la vision degli oggetti usati dallo SQL…..

6 – Drill down to the Index level

…con I relativi indici, ovviamente!

7 – Ask “new” file name for the extracted “rescue” statistics

Uscendo con PF3 il tool chiede il dsname delle Production Statistics originali, come estratte dal job del primo passo, e un “new” file name per le rescue statistics estratte:

8 – Perform the RUNSTATS Rescue extraction

Il JCL che esegue l’estrazione delle RUNSTATS Rescue, incluso il passo opzionale su saving su GDG, si presenta come sotto:

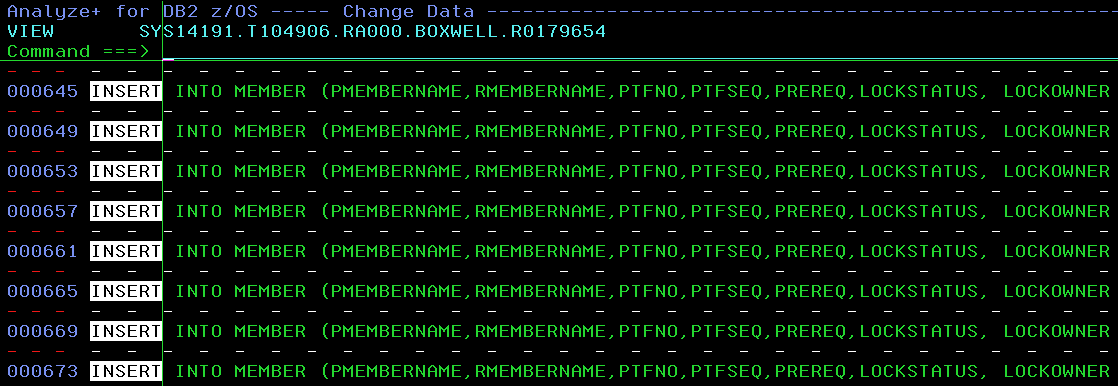

9 – Reset the statistics and executes the RUNSTATS

Infine, viene selezionato il terzo step, che azzera le statistiche ed esegue la RUNSTATS che provvede al flush della DSC

10 – The “Rescued” Statistics

Adesso, la prossima volta che lo statement apparirà, userà le “rescued” statistics e riprodurrà il vecchio Access Path.

Nel prossimo mese

Nel prossimo mese desidero espandere la problematica trattata con la possibilità di estenderne la soluzione allo SQL statico.

Il mese successive, scenderò nei dettagli della ‘DSC Protection scenario’ che ho citato prima. Non si tratta di un tool tascabile, ovviamente, ma è molto interessante!

Come sempre questions or comments sono benvenuti,

TTFN Roy Boxwell

Senior Software Architect